Today at the Hot Chips 24 conference, George Chrysos discussed the Intel MIC (Many Integrated Core) architecture of the Knights Corner chip, to be formally called the Intel Xeon Phi coprocessor. This chip runs Linux, but it’s designed to act as a coprocessor to one of Intel’s Xeon server processors. Because this was not a formal product announcement, Intel is being coy about just how many processor cores there are on the device, but it is saying “more than 50.” These are x86 processor cores augmented with wide SIMD engines, and you program them with x86 software libraries, compilers, debuggers, and other established software-development tools from the extensive Intel software ecosystem.

The target applications for the Intel Xeon Phi coprocessor are massively parallel HPC (high-performance computing) problems that are currently solved using large server clusters. In a very real sense, the Intel Xeon Phi coprocessor is architected as just such a cluster. The “more than 50” processor cores are networked using an on-chip, high-speed ring interconnect that also ties in the chip’s many GDDR5 graphics DRAM controllers and tag directories. There is one tag directory per processor core. When a core misses in its local L2 cache, these directories are used to determine whether the desired data resides anywhere else on the chip. Here’s a simplified block diagram of the Intel Xeon Phi coprocessor:

Note that this block diagram is not meant to be numerically accurate: it doesn’t necessarily show exactly how many processor cores, GDDR5 graphics DRAM controllers, or tag directories there are on a chip. However, there is just one PCIe interface that links the chip to the host Xeon server system. Although the physical system link is PCIe, it runs TCP/IP so that the entire chip looks like a familiar computing cluster.
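To make the tag-directory idea above a little more concrete, here is a toy Python model of what happens on a local L2 miss. It is only a sketch under stated assumptions, not Intel’s implementation: the class name `Chip`, the four-core count, the 64-byte line size, and the modulo hash that picks a line’s home directory are all invented for illustration.

```python
"""Toy model of per-core tag directories consulted on a local L2 miss."""

NUM_CORES = 4    # assumption: tiny count for illustration (the chip has "more than 50")
LINE_BYTES = 64  # assumption: cache-line size picked for this sketch


class Chip:
    def __init__(self, num_cores=NUM_CORES):
        self.num_cores = num_cores
        # One private L2 per core: line number -> data.
        self.l2 = [dict() for _ in range(num_cores)]
        # One tag directory per core: line number -> set of cores caching it.
        self.directory = [dict() for _ in range(num_cores)]
        # Stand-in for the GDDR5 memory behind the memory controllers.
        self.memory = {}

    def home(self, line):
        # Assumption: lines are spread across directories by a simple modulo.
        return line % self.num_cores

    def load(self, core, addr):
        line = addr // LINE_BYTES
        if line in self.l2[core]:
            return self.l2[core][line], "local L2 hit"
        # Local miss: ask the line's home tag directory who else holds it.
        owners = self.directory[self.home(line)].setdefault(line, set())
        if owners:
            src = min(owners)
            data = self.l2[src][line]
            where = f"forwarded from core {src}"
        else:
            data = self.memory.get(line, 0)
            where = "fetched from memory"
        # Fill the local L2 and record the new sharer in the directory.
        self.l2[core][line] = data
        owners.add(core)
        return data, where


chip = Chip()
chip.memory[2] = 99
first = chip.load(0, 128)   # line 2 is not cached anywhere yet
second = chip.load(1, 130)  # same line, now held in core 0's L2
third = chip.load(0, 128)   # core 0 already has it
print(first, second, third)
```

The point of the sketch is the order of the checks: local L2 first, then the directory, and only then off-chip memory.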

Quite a lot of thought has gone into managing the power of this device. Individual processor cores can be power gated on and off, although the L2 cache needs to stay alive. If all of the processor cores are powered off, the chip automatically disables the clock to the individual L2 caches, the tag directories, and the GDDR5 graphics memory controllers because the chip can do no useful work with all of the processor cores powered down.
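The power-management rule just described can be written as a small state model. This is a hedged sketch of the policy as stated in the talk summary, not the chip’s actual control logic; `PowerManager`, `gate_core`, `wake_core`, and `uncore_clock_on` are hypothetical names.

```python
class PowerManager:
    """Toy model: per-core power gating plus an all-cores-off clock gate."""

    def __init__(self, num_cores):
        self.core_on = [True] * num_cores
        # Clock feeding the L2 caches, tag directories, and memory controllers.
        self.uncore_clock_on = True

    def _update_uncore_clock(self):
        # The shared clock is disabled only when every core is powered off.
        self.uncore_clock_on = any(self.core_on)

    def gate_core(self, core):
        # Power-gate one core; its L2 stays alive while any core is still on.
        self.core_on[core] = False
        self._update_uncore_clock()

    def wake_core(self, core):
        # Powering any core back on re-enables the shared clock.
        self.core_on[core] = True
        self._update_uncore_clock()


pm = PowerManager(3)
pm.gate_core(0)
one_core_gated = pm.uncore_clock_on   # other cores still running
pm.gate_core(1)
pm.gate_core(2)
all_cores_gated = pm.uncore_clock_on  # nothing left to do useful work
pm.wake_core(1)
after_wake = pm.uncore_clock_on
print(one_core_gated, all_cores_gated, after_wake)
```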

Intel has benchmarked a prototype Xeon server system that includes a Xeon Phi coprocessor using the Green500 (www.green500.org) benchmarks. It ranks at number 150 out of the top 500 most energy-efficient supercomputers in the world, delivering 1381 MFLOPS/W while consuming 72.9 kW.
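As a quick sanity check, the two figures quoted above imply a sustained throughput for the benchmarked system. The short calculation below assumes both numbers come from the same measured run; the helper name `implied_gflops` is made up for this example, and the result is derived arithmetic rather than a number Intel stated.

```python
def implied_gflops(mflops_per_watt, kilowatts):
    """Throughput implied by an efficiency figure and a power draw."""
    watts = kilowatts * 1_000
    mflops = mflops_per_watt * watts
    return mflops / 1_000  # MFLOPS -> GFLOPS


implied = implied_gflops(1381, 72.9)
print(f"about {implied / 1_000:.1f} TFLOPS")
```

That works out to roughly 100 TFLOPS for the system as a whole.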

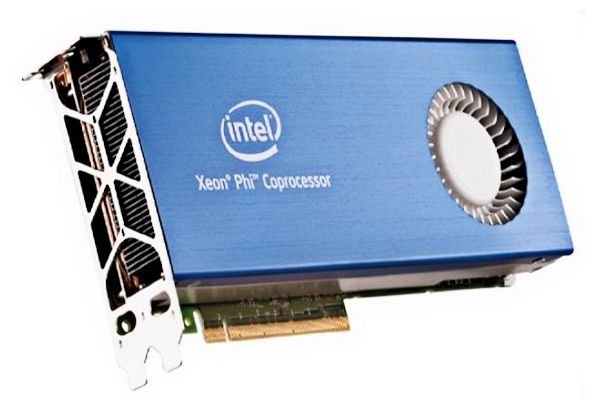

Here’s a photo of the Intel Xeon Phi coprocessor packaged on a PCIe card:

72.9kW? What’s wrong with you? Kilo-watts on a PCIe card is impossible. Take the K out!

Bapcha

Sorry for the misunderstanding but that is a system number, based on more than one coprocessor card.

Steve